From its position as provider of the technology with the potential to deliver killers generative AI apps throughout global economies, governments and cultures, Nvidia took the stage today at its GTC conference here in San Jose, and in so doing, Nvidia took center stage in the tech and business worlds.

In all, the company continues to advance across a range of hardware, software and business alliances with the goal of consolidating and extending its leadership in AI technologies, creating the perception of a company that, standing on the shoulders of decades of generally excellent execution, continues to pour out new products, partnerships and innovations that put immense pressure on competitors striving to take on Nvidia in the realm of accelerated computing.

This year’s GTC, the first to be held on-site since the Covid-19 pandemic, comes after a 16-month run for Nvidia of extravagant success, since the onset of generative AI enabled by its GPUs. Nvidia stock, priced at $128 in October 2022, is currently at $885.

Today, Nvidia CEO Jensen Huang took the stage for a two-hour GTC keynote event and victory lap (pictured above), which was held before a sold-out crowd at the 17,500-seat SAP Center, home of the San Jose Sharks of the National Hockey League.

Today’s news begins with Blackwell, which Nvidia said delivers six “revolutionary” technologies that together enable AI training and real-time LLM inference for models scaling up to 10 trillion parameters.

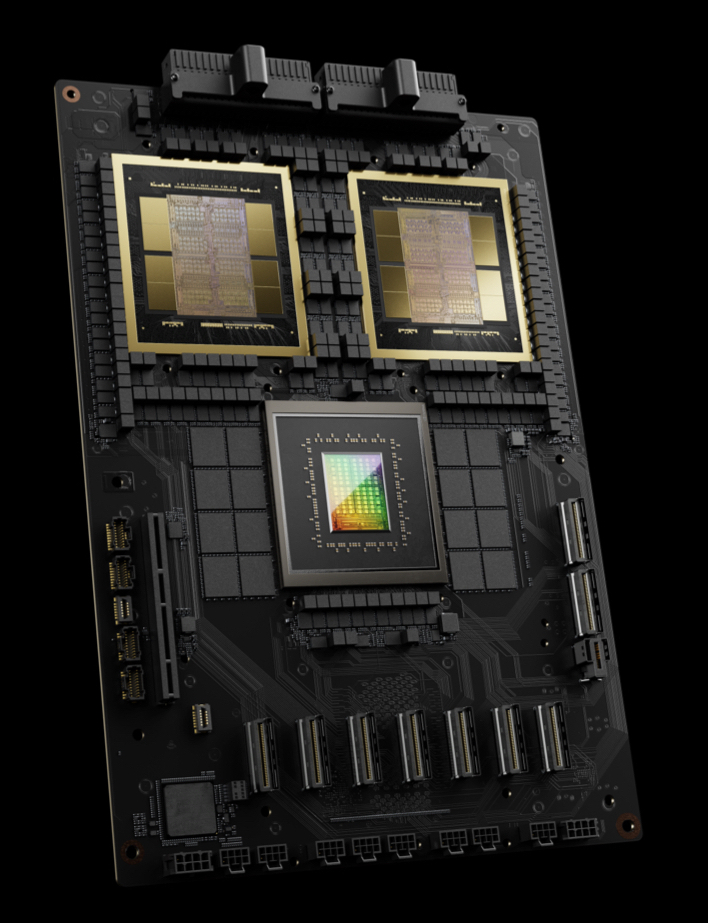

Blackwell chips are comprised of 208 billion transistors and are manufactured using a two-reticle limit 4NP TSMC process with GPU dies connected by 10 TB/second chip-to-chip link into a single, unified GPU.

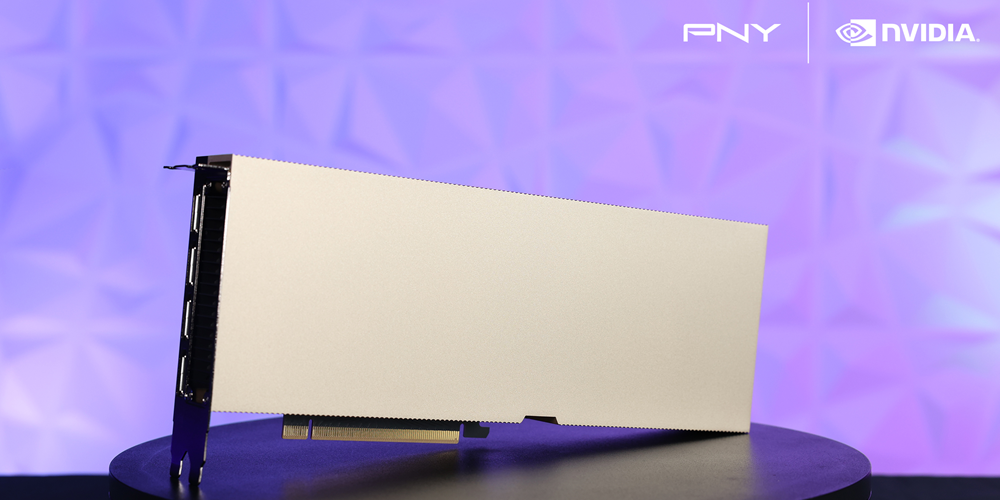

Nvidia Grace Blackwell GB200 chip

Blackwell’s second-generation transformer engine is fueled by micro-tensor scaling support and NVIDIA’s “dynamic range management” algorithms integrated into NVIDIA TensorRT-LLM and NeMo Megatron frameworks. The result: Blackwell will double the compute and model sizes with new 4-bit floating point AI inference capabilities, according to the company.

Ian Buck, Nvidia’s GM/VP of Accelerated Computing, described Blackwell at a media briefing last week as a “new engine for industrial revolution, built to democratize trillion-parameter AI.”

“This GPU has 20 petaflops of AI performance on a single GPU, it delivers four times the training performance of Hopper (Nvidia’s previous-generation GPU architecture) at 30 times the inference performance, with overall 25 times better energy efficiency,” he said, adding that Blackwell will expand AI data center scale to beyond 100,000 GPUs.

He said Blackwell is a new class of AI superchip that combines the two largest possible dies unified as one GPU connected by a high bandwidth interface fabric operating at 10 terabytes a second “to combine these two GPUs, left and right dies together to build a holistic, one architecture.” This allows Blackwell to have 192 gigabytes of HBM 3D memory with over 8 terabytes a second of bandwidth, and 1.8 terabytes a second of NVLink.

“Because we’ve combined the left brain with the right brain, and through … the fabric which connects to every core, this unified GPU operates as one,” Buck said. “There’s no separation, no changes to the programmer on every CUDA program in existence today. It delivers the full performance of the entire architecture, without any compromises or multi-chip side effects.”

Nvidia also announced the 5th-Generation NVLink designed for multitrillion-parameter and mixture-of-experts AI models. It delivers 1.8TB/s bi-directional throughput per GPU for communication among up to 576 GPUs.

Blackwell-powered GPUs include a dedicated RAS Engine designed for reliability, availability and serviceability. Nvidia said the Blackwell architecture also adds capabilities at the chip level to utilize AI-based preventative maintenance to run diagnostics and forecast reliability issues. “This maximizes system uptime and improves resiliency for massive-scale AI deployments to run uninterrupted for weeks or even months at a time and to reduce operating costs,” the company said.

On the security front, Blackwell includes confidential computing capabilities intended to protect AI models and customer data, with support for new native interface encryption protocols for privacy-sensitive industries such as healthcare and financial services.

Blackwell offers a dedicated decompression engine that supports the multiple formats, accelerating database queries for data analytics and data science. “In the coming years, data processing, on which companies spend tens of billions of dollars annually, will be increasingly GPU-accelerated,” Nvidia said.

“For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI,” said Huang. “Generative AI is the defining technology of our time. Blackwell GPUs are the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

Named in honor of David Harold Blackwell — a mathematician who specialized in game theory and statistics, and the first Black scholar inducted into the National Academy of Sciences — the new architecture succeeds the NVIDIA Hopper architecture, launched two years ago.

Nvidia also announced the GB200 Grace Blackwell Superchip, which connects two Nvidia B200 Tensor Core GPUs to the Arm-based Nvidia Grace CPU over a 900GB/s low-power chip-to-chip link. The company said the GB200 provides up to a 30x performance increase compared to the Nvidia H100 Tensor Core GPU for LLM inference workloads and reduces cost and energy consumption by up to 25x.

GB200-powered systems can be connected with the NVIDIA Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms, also announced today, which deliver networking at speeds up to 800Gb/s.

Nvidia said the GB200 is part of the Nvidia GB200 NVL72, a multi-node, liquid-cooled, rack-scale system designed for compute-intensive workloads. It combines 36 Grace Blackwell Superchips, which include 72 Blackwell GPUs and 36 Grace CPUs interconnected by fifth-generation NVLink.

Jensen Huang holds Blackwell, left, and Hopper chips at GTC 2024 keynote

GB200 NVL72 includes NVIDIA BlueField-3 data processing units for cloud network acceleration, composable storage, zero-trust security and GPU compute elasticity in hyperscale AI clouds. The platform acts as a single GPU with 1.4 exaflops of AI performance and 30TB of memory, and is a building block for the newest DGX SuperPOD, according to the company.

Nvidia also offers the HGX B200, a server board that links eight B200 GPUs through high-speed interconnects to support x86-based generative AI platforms. HGX B200 supports networking speeds up to 400Gb/s through Nvidia’s Quantum-2 InfiniBand and Spectrum-X Ethernet networking platforms.

Blackwell-based products are scheduled to be available from partners starting later this year.

Nvidia said AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure will be among early cloud service providers to offer Blackwell instances, as will Applied Digital, CoreWeave, Crusoe, IBM Cloud and Lambda. Sovereign AI clouds will also provide Blackwell-based cloud services and infrastructure, including Indosat Ooredoo Hutchinson, Nebius, Nexgen Cloud, Oracle EU Sovereign Cloud, Oracle US, UK, and Australian Government Clouds, Scaleway, Singtel, Northern Data Group’s Taiga Cloud, Yotta Data Services’ Shakti Cloud and YTL Power International.

GB200 will also be available on NVIDIA DGX Cloud, an AI platform co-engineered with cloud service providers for developers to build generative AI models. AWS, Google Cloud and Oracle Cloud Infrastructure plan to host new these instances later this year.

Nvidia said Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo and Supermicro are expected to deliver servers based on Blackwell, as are Aivres, ASRock Rack, ASUS, Eviden, Foxconn, GIGABYTE, Inventec, Pegatron, QCT, Wistron, Wiwynn and ZT Systems.

Software makers, including engineering simulation companies Ansys, Cadence and Synopsys will use Blackwell-based processors for designing and simulating electrical, mechanical and manufacturing systems and parts.

The Blackwell product line is supported by Nvidia AI Enterprise, an operating system for production-grade AI that includes Nvidia NIM inference microservices — also announced today — as well as AI frameworks, libraries and tools that enterprises can deploy on NVIDIA-accelerated clouds, data centers and workstations.